The term “AI,” short for Artificial Intelligence, was coined in the mid-1950s by John McCarthy, then at Dartmouth College and later a computer scientist at Stanford University, for the Dartmouth workshop of 1956. The concept has been widely popularised by many movies ever since, including The Matrix, which also introduced us to the idea of “The Red Pill.”

Artificial Intelligence represents the vision that machines will one day become intelligent and, therefore, be capable of thinking for themselves. Moreover, it is the foundation on which the latest craze for technological utopia is to rest.

I was about twelve years old when I first became enthralled by the idea of a “thinking machine,” and it was a fascination which drew me into computers and programming. You see, the portrayal of AI in the science fiction of my youth was generally a positive one. As the years went by, however, my enthusiasm for it waned. In its place, despite some apparent technological advances, there grew a feeling of vague disappointment—as if my heart could sense there was something wrong, but my mind could not quite put a finger on what it was.

Today, I think I know what it is: on so many levels, the modern realisation of AI bears no resemblance to the dreams I once held for it.

For one, the AI with which we are now familiar is nothing of the sort—it does not represent “intelligence” or any meaningful form of artificial thinking or consciousness. It is merely clever programming, or let’s call it “algorithmic engineering.” In itself, there is nothing wrong with “clever programming.” In fact, it could be a fascinating subject, provided we are clear on what we are dealing with and its limitations.

For another, the fictional depictions of AI which so captivated me in childhood generally had one thing in common which is absent today: virtually every sci-fi robot (such as “R2-D2” from Star Wars) or spaceship computer (such as “HAL 9000” from the movie 2001: A Space Odyssey) was a unique, independent and autonomous agent which made its own decisions. In other words, these were depictions of machines which arguably had minds of their own. Indeed, that was what I understood the very point of Artificial Intelligence to be, although I now regard the terms “mind” and “machine” as mutually exclusive.

Today’s so-called AI, however, appears to be overwhelmingly centralised in its implementation. When you speak to “Alexa,” for example, it has no uniqueness or anything which can be described as “personality.” It doesn’t even “process” what you say to it. Rather, it is nothing more than a dumb terminal which transmits your words to a centralised mainframe (or more precisely a corporate “cloud” of computers) controlled by Amazon. The same goes for Apple’s “Siri.”

Above all else, however, I find that I can no longer endorse or condone how AI is being applied—against people. This is not even AI after all! It is merely the automation and leveraging of information for purposes of wealth extraction, centralisation and control.

The term “Big Data” is also a popular technical buzzword associated with AI. It describes the technology behind the vast data stores used to feed the algorithms. But just what is all this data being stored actually about?

Well, yes, it’s invariably about people: where we go, who our friends are, where we shop, along with traits which indicate our level of education, outlook, health, emotional states and behavioural tendencies.

Here, for example, is a British consultancy firm extolling the virtue of AI in its marketing material:

This behavioral data is invaluable because if you also overlay that with AI, it’s possible to accurately predict your customer’s next actions and have the perfect campaign ready — Red Eye International Ltd

Shoshana Zuboff is a Harvard Professor who sees a different side to things. She has a name for what is happening below the level of everyday awareness. She calls it “Surveillance Capitalism.”

Surveillance capitalism unilaterally claims human experience as free raw material for translation into behavioral data. —Shoshana Zuboff, The Age of Surveillance Capitalism

When Gmail first arrived, it offered a gigabyte of email storage for everyone, for free. This represented a massive amount of storage space and, at the time, I wondered just what the business model behind it was. All we had to do in return, it seemed, was to accept that Google collected some “data” for purposes of showing more relevant ads, but otherwise it was something for nothing.

The word “data”, however, is a misnomer which disguises the true nature of that which is being taken from us. We actually need a word far more apt than mere “data,” for it is not merely a stream of abstract numbers of interest to no one except statisticians and geeks. Rather it is the flow of sovereignty and free-will from individuals to those who control the machines.

Today, I am rather of the mindset that anything “free” is not worth it—the ultimate price is too high. For, as we have experienced over recent years, things do not stop at mere behavioural prediction, but extend to “nudging”, manipulation — and ultimately far beyond toward outright control. As Zuboff writes:

It is no longer enough to automate information flows about us; the goal now is to automate us.

In an article published by The World Economic Forum, it is clear that its author sees a future in which all things are to be produced by machines powered by AI, as people are side-lined and left to focus only on “leisure, creative, and spiritual pursuits.”

With the right mindset, all societies could start to forge a new AI-driven social contract, wherein the state would capture a larger share of the return on assets, and distribute the surplus generated by AI and automation to residents. Publicly-owned machines would produce a wide range of goods and services, from generic drugs, food, clothes, and housing, to basic research, security, and transportation —Sami Mahroum, How an AI utopia would work, 2019, The World Economic Forum

Shockingly, the author promotes the idea that the only kind of work that will be needed will be that geared toward the accumulation of status and wealth, with other work previously “necessary for a dignified existence” being “all but eliminated”. If this were to come to pass, I cannot actually see any requirement for government and their stakeholders to distribute anything to mere residents as promised, given that we will no longer serve any purpose or have any say in things. Rather, I see such a future as being one in which people simply find themselves unnecessary and disposable.

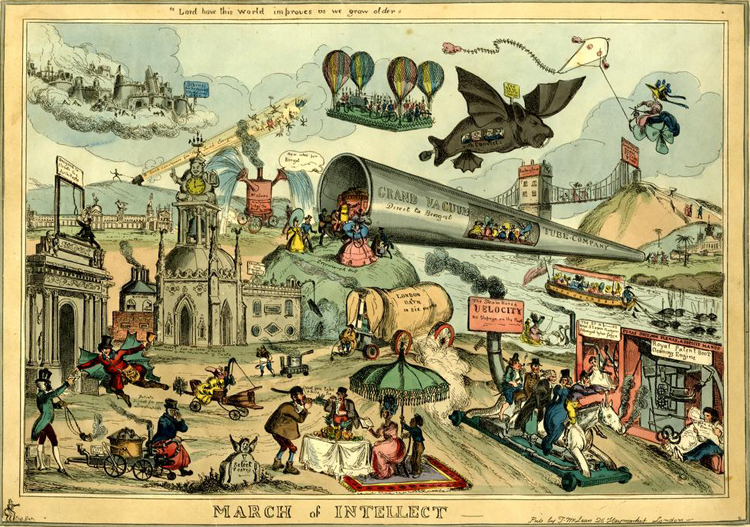

In the 19th century and early 20th, the automation of labour via mechanisation granted power to industrialists, but had severe consequences for skilled people. It has long been argued since that it was a necessary price to pay for progress. Certainly, I would not suggest that the weaving loom, for example, could or should be uninvented.

Today we face a parallel upheaval, but one which threatens our very existence, if not our souls. I rather feel that this time, however, things will have a profoundly different outcome.

The reason I claim that AI does not represent any kind of true intelligence is rather profound, and involves such things as uncertainty, information and free-will. These were not things I understood years back, at least beyond the everyday usage of the words. However, I now feel they are important as they represent the seeds of destruction for the dreams of technological utopia or, more accurately, dystopia.

The very definition of “machine” is that which transforms energy from one form to another—but it does not generate it out of nowhere. Likewise, computers transform information from one form to another, but they do not generate it. They merely reflect back information they get from elsewhere in a different form.

Minds are things which possess free-will, whereas deterministic rule-based machines do not. Human minds, like others in the animal kingdom, are not fundamentally deterministic or algorithmic in nature, but tap into uncertainty at the quantum level.[*]

In my youth, the buzzword of the day was “digital”; this was the future, we were told. The very purpose of digitisation is the elimination of uncertainty by collapsing infinite possibilities granted to us by the Universe into a finite number of discrete and knowable ones. In this way, it is possible to copy digitally encoded music indefinitely, without loss of quality, with each subsequent copy being absolutely indistinguishable from the original. In an entirely digital world, however, you lose something which hardly seems to matter at first, but it really does: the ability to bring into existence new information.
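
For the technically minded, the point about digital copying can be illustrated with a minimal Python sketch. It is only an illustration under stated assumptions: the function names (`quantise`, `analogue_copy`, `digital_copy`), the 256 levels, the noise figure and the fifty generations are all hypothetical choices made for the example, not anything drawn from a real audio format.

```python
import random

def quantise(sample: float, levels: int = 256) -> int:
    """Map a continuous value in [-1.0, 1.0] onto one of `levels` discrete steps."""
    clipped = max(-1.0, min(1.0, sample))
    return round((clipped + 1.0) / 2.0 * (levels - 1))

def analogue_copy(signal, noise, rng):
    """Copy a continuous signal; each generation picks up a little random error."""
    return [s + rng.uniform(-noise, noise) for s in signal]

def digital_copy(codes):
    """Copy discrete codes; the result is exactly the same list of integers."""
    return list(codes)

rng = random.Random(0)  # fixed seed so the run is repeatable

# A hypothetical "analogue" recording: 1000 continuous sample values.
original = [rng.uniform(-1.0, 1.0) for _ in range(1000)]

# Digitising collapses each continuous value onto one of 256 discrete codes.
digital_master = [quantise(s) for s in original]

# Make fifty successive generations of copies by each method.
analogue, digital = original, digital_master
for _ in range(50):
    analogue = analogue_copy(analogue, 0.01, rng)
    digital = digital_copy(digital)

analogue_drift = max(abs(a - b) for a, b in zip(analogue, original))
digital_identical = digital == digital_master
distinct_codes = len(set(digital_master))

print("Largest drift in the analogue copy:", analogue_drift)
print("Digital copy identical to master:", digital_identical)
print("Distinct values in the digital master:", distinct_codes)
```

The fiftieth digital copy is the same list of integers as the first, whereas the analogue copy has wandered away from its source. The price of that perfect repeatability is that the digital master can only ever hold values from a fixed, finite set.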

Living things, in which I would include human societies as they form out of our interactions with each other, cannot grow in isolation but must interact with and exchange information with other autonomous members in order to thrive. If you place human beings in solitary confinement, for example, we degenerate both mentally and physically over time. Likewise, without the ability to generate new information, a so-called utopian society will not be able to sustain itself but will become hollowed out and brittle. Unable to innovate and adapt to change, things will simply fall apart as entropy increases in what is essentially a closed system of strict determinism.

In short, neither Surveillance Capitalism nor the technological utopia of the WEF has any sustainable future; both will collapse in an implosion of nothingness. This gives cause for hope, at least in the long term, as new shoots will then be able to emerge.

I retain an interest in computers, but only when applied to meaningful endeavours. Computers should not be turned inward on ourselves in order to automate our lives and deconstruct all that we are.

Rather than accept the “free” but trivial conveniences offered by the business model of Surveillance Capitalism, or wait for the WEF’s dystopian dream to arrive, I believe that we should build a free-market business case for independent and unconnected devices, along with private computer networks with well-defined boundaries to the outside world.

[*] Roger Penrose, The Emperor’s New Mind. 1990.

Andy Thomas is a programmer, software author and writer in the north of England. He is interested in the philosophical implications of science, the nature of nature, and the things in life which hold ‘value’. You can find him on Substack: https://kuiperzone.substack.com

Follow NER on Twitter @NERIconoclast

8 Responses

I started working as a programmer over fifty years ago. I have always considered a computer to be, to use a four-letter word, a tool. Like any tool, it is an instrument for helping a human being achieve a goal. One of the first things I was told about computers was that they were stupid: they had to be told what you wanted them to do. Nowadays it’s the opposite: computers tell us what to do.

As for apps like Siri, I soon lost interest in it when I realised its only useful feature was as a front end for Google.

Yes, AI is a (dangerous) modern myth. Andy Thomas’ essay on the subject is a timely reminder that AI has been the locus of cavalier and absurdly over-hyped expectations. There are many thoughtful experts within IT who are deeply sceptical about the possibility that AI can grow and grow… and then eventually overtake the human brain in intelligence, the so-called ‘singularity’. But in spite of this body of serious, informed doubt, momentum in the media seems to be, perversely, with those yesbodies in IT who have succumbed to the illusion of AI, and have ended up swallowing their own hype and propaganda.

This notion that AI may eventually overtake and thereby subjugate the human mind is a particularly deadly source of doom, pessimism and dystopia in today’s none-too-confident world. It is arguable that it should be banned from IT platforms, because it can push nervous people towards the suicidal.

The case against AI begins by pointing out that ‘Artificial Intelligence’ is a brazenly self-awarded application of the word ‘intelligence’. This is a word which has been used historically to connote a high level of cognitive achievement, and by co-opting this high-status word, the computer sector implies that its neural networks have reached this level of high cognitive achievement.

Of course they haven’t. Today’s AI exposes its own limitations when it provides off-the-cuff subtitles on TV. The result is typically ludicrous gaffes and hilarious misinterpretations every second line.

A better term for what neural networks actually do would be ‘basic comprehension’. It is a useful development, but far less than a sign of anything like ‘intelligence’.

Neural networks operate on a simplistic, raw interpretation of ‘empiricism’ which assumes that minds operate by spotting patterns. But some apparent patterns are misleading and inappropriate. This is the fallacy of numerology. Patterns need to be approached critically, not naively… like AI-driven cars which were dangerously flummoxed by some wag who had taken a paint brush to a 30-mile-an-hour road sign and turned it into an ‘80’. The whole history of science is a story of minds being challenged by the emergence of strange patterns, and going on to try to integrate these strange patterns by imaginative theory-building, eventually melding them smoothly into our familiar fabric of expectations.

A recent TV programme on AI showed a person at a street corner in Paris. The commentator pointed out that taking into account the full meaning and the implications of everything that person could see around her (people, dogs, cars, bikes, signs, shops, ads, apartments…) would demand thousands of times more than anything computers could ever be expected to do. And even if such a huge body of information could be marshalled, the laws of complexity would kick in (with information overload), thus preventing any kind of sensible conclusion from emerging.

Thanks Chris,

I’ve been thinking about this so-called “Singularity”: the idea that machines will design better machines by purely mechanical means. The question I would ask is where, in the designing, does the information come from?

Good article Andy. Some time back I read an article about AI software creating new literature and artwork, after being ‘fed’ many examples of each, which experts in each respective field could not tell apart from those produced by people (a kind of update of the Turing test). In essence, the article implied the AI created something new after ‘seeing’ many examples, in much the same way our brains process information to create something new. What’s your opinion on that?

Hi Nikos,

You can try an AI image generator for yourself here (try NightCafe):

https://www.unite.ai/10-best-ai-art-generators/

I was contemplating this some 20 years ago, when the ideas were being discussed. I was enthusiastic about it at the time, I recall.

Today, however, I have a different outlook. I don’t think these things are generating information, but merely recycling existing information they have sucked in from the web. The really profound question in this regard is just what is “information” and how is it generated?

PS. Also see this short movie — it was written by AI, but acted by humans.

https://www.youtube.com/watch?v=LY7x2Ihqjmc&t=4s

Thanks for your post.

Unreal Person is an AI image generator that is trained on billions of human faces to generate a brand new face that does not exist.

The faces are AI-generated images, so you can be sure that the person shown does not exist.

https://www.unrealperson.com